In the latter case, results of offline evaluations were worth to be published, even if they contradict results of user studies and online evaluations. We discuss potential reasons for the non-predictive power of offline evaluations, and discuss whether results of offline evaluations might have some inherent value. The evaluations show that results from offline evaluations sometimes contradict results from online evaluations and user studies. The approaches differed by the features to utilize (terms or citations), by user model size, whether stop-words were removed, and several other factors. We implemented different content-based filtering approaches in the research-paper recommender system of Docear. offline evaluations, online evaluations, and user studies, in the context of research-paper recommender systems. In this paper, we examine and discuss the appropriateness of different evaluation methods, i.e. However, recommender-systems evaluation has received too little attention in the recommender-system community, in particular in the community of research paper recommender systems. The evaluation of recommender systems is key to the successful application of rec-ommender systems in practice. We discuss these findings and conclude that to ensure reproducibility, the recommender-system community needs to (1) survey other research fields and learn from them, (2) find a common understanding of reproducibility, (3) identify and understand the determinants that affect reproducibility, (4) conduct more comprehensive experiments (5) modernize publication practices, (6) foster the development and use of recommendation frameworks, and (7) establish best-practice guidelines for recommender-systems research. Since minor variations in approaches and scenarios can lead to significant changes in a recommendation approach's performance, ensuring reproducibility of experimental results is difficult. 
For instance, the optimal size of an algorithms' user model depended on users' age. Some of the determinants have interdependencies. Determinants we examined include user characteristics (gender and age), datasets, weighting schemes, the time at which recommendations were shown, and user-model size. We found several determinants that may contribute to the large discrepancies observed in recommendation effectiveness. For example, in one news-recommendation scenario, the performance of a content-based filtering approach was twice as high as the second-best approach, while in another scenario the same content-based filtering approach was the worst performing approach. The experiments show that there are large discrepancies in the effectiveness of identical recommendation approaches in only slightly different scenarios, as well as large discrepancies for slightly different approaches in identical scenarios. We conduct experiments using Plista's news recommender system, and Docear's research-paper recommender system. In this article, we examine the challenge of reproducibility in recommender-system research. However, comparing their effectiveness is a challenging task because evaluation results are rarely reproducible. Numerous recommendation approaches are in use today. The datasets are a unique source of information to enable, for instance, research on collaborative filtering, content-based filtering, and the use of reference-management and mind-mapping software. The four datasets contain metadata of 9.4 million academic articles, including 1.8 million articles publicly available on the Web the articles' citation network anonymized information on 8,059 Docear users information about the users' 52,202 mind-maps and personal libraries and details on the 308,146 recommendations that the recommender system delivered. 
It supports researchers and developers in building their own research paper recommender systems, and is, to the best of our knowledge, the most comprehensive architecture that has been released in this field. for crawling PDFs, generating user models, and calculating content-based recommendations. The architecture comprises of multiple components, e.g. In this paper, we introduce the architecture of the recommender system and four datasets.

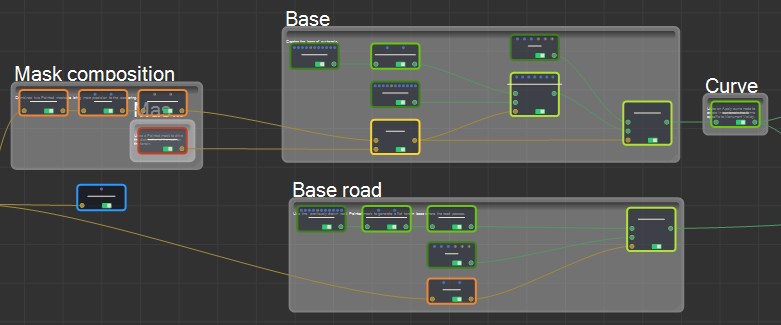

#Creating noddes docear software

In the past few years, we have developed a research paper recommender system for our reference management software Docear.
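
The content-based filtering variants mentioned above (term-based user models of varying size, with or without stop-word removal) can be illustrated with a minimal sketch. This is not Docear's implementation: the function names, the tiny stop-word list, the raw term-frequency weighting, and the cosine ranking are all assumptions chosen for illustration.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption, not Docear's actual list).
STOP_WORDS = {"a", "an", "and", "the", "of", "in", "for", "to", "is", "on"}


def tokenize(text, remove_stop_words=True):
    """Lowercase the text and split it into alphabetic terms."""
    terms = re.findall(r"[a-z]+", text.lower())
    if remove_stop_words:
        terms = [t for t in terms if t not in STOP_WORDS]
    return terms


def build_user_model(user_texts, model_size=10, remove_stop_words=True):
    """Keep the `model_size` most frequent terms across the user's texts.

    Raw term frequency is used as the weight (hypothetical choice).
    """
    counts = Counter()
    for text in user_texts:
        counts.update(tokenize(text, remove_stop_words))
    return dict(counts.most_common(model_size))


def cosine(a, b):
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(weight * b.get(term, 0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user_model, candidates, top_k=3, remove_stop_words=True):
    """Rank candidate documents (id -> text) by similarity to the user model."""
    scored = []
    for doc_id, text in candidates.items():
        doc_vector = Counter(tokenize(text, remove_stop_words))
        scored.append((cosine(user_model, doc_vector), doc_id))
    # Highest score first; ties are broken by document id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]
```

Varying `model_size` and `remove_stop_words` in this sketch mirrors the kind of parameter differences the abstracts describe. A citation-based variant would replace `tokenize` with a function that extracts cited-paper identifiers.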
